26 February 2025

The flurry of AI deep research launched at the beginning of 2025 has significant consequences for knowledge workers – and the global economy. flow shares insights from Deutsche Bank Research’s paper that identifies winners and losers – and AI’s capacity for longer-term self-improvement


On 20 January 2025, the Chinese artificial intelligence (AI) start-up laboratory DeepSeek launched a free, open-source, large language model (LLM) that it claims took two months and less than US$6m to train, using reduced-capacity chips from Nvidia known as H800s.1

This was significant, notes Deutsche Bank Research’s Adrian Cox in the Thematic Research paper What AI deep research means for you, because these DeepSeek AI models have “exposed a number of emerging but underappreciated themes in AI”. These are:

- Innovative alternatives to the brute force of chips;

- The potential of cheap, open-source, small language models; and

- The underestimated capabilities of Chinese companies.

The paper points out that on the other side of the world, OpenAI unveiled “deep research” on 2 February,2 eight weeks after Google had released its own “slightly less capable version” in December 2024, and this was followed by a similar open-source model a day later.3

While deep research has long been anticipated by those concerned that AI agents could reduce jobs, it has generally been seen as a long-term trend to keep an eye on rather than an immediate problem. Cox’s paper maintains that the OpenAI deep research launch will have “profound consequences for knowledge work and the economy”. The agents, he explains, use time-consuming reasoning to comb through huge amounts of online information and complete multi-step research tasks. This could, for example, include writing research reports at a more advanced level than those put out by existing chatbots.

This article summarises what AI deep research means for the world, drawing on Deutsche Bank Research’s own test of deep research: the time taken to produce a 9,000-word report, citing 22 sources with links, that assesses the impact of new US steel and aluminium tariffs. The same topic was covered in a separate flow article that took around eight hours to get right, with the research reports it referenced combining months of work from the commodities team.

Likely consequences of AI deep research

Having tested OpenAI’s deep research, Cox sees three likely consequences:

- Cognitive work will change fast;

- Advanced chips will be in high demand; and

- AI is one step closer to improving itself.

Changing face of cognitive work

There is a risk that many humans will be deskilled, because human actors will be rewarded for asking their AI agents the right questions in the right way, and then using their judgement to assess the answers, with the rest of the cognitive process offloaded. Winners will be those with the least experience, whose performance will be lifted to a new minimum level, and those with the most experience, who know where to focus. “Those in the middle will struggle to add value,” says the report. The other problem is a breakdown of the pipeline of humans who have worked with the underlying information from first principles, which could make it hard to judge AI-generated output.

Demand for advanced chips

Despite the 17% one-day drop on 27 January in chip manufacturer Nvidia’s stock4 “on the mistaken belief that the latest chips were no longer needed for AI,” Cox says that deep research shows “you can never have too much computing power”. However, deep research uses that power more efficiently, through chain-of-thought prompting and at the inference stage, when the model is running. He sees two branches of AI tools emerging: one of cheap small language models that can fit on a phone, and the other of ever larger language models, such as those needed to power deep research models in the cloud.

AI self-improvement

Cox explains how deep research shows what happens when an AI system combines a reasoning model, which can break a task down into steps rather than merely generating words, with an agent that has tools, such as a long working memory and the capacity to search the internet.
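To make that combination concrete, the following is a minimal illustrative sketch in Python, not a description of how OpenAI's deep research is actually built. Every name in it (`ResearchAgent`, `toy_plan`, `toy_search`) is hypothetical, and the planning and search functions are offline stand-ins for what would, in a real system, be a reasoning model and a web search tool.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class ResearchAgent:
    """Toy agent: a 'reasoning' step plans sub-tasks, tools carry them out."""
    plan: Callable[[str], List[str]]   # hypothetical stand-in for a reasoning model
    search: Callable[[str], str]       # hypothetical stand-in for an internet search tool
    memory: List[str] = field(default_factory=list)  # working memory of findings

    def run(self, task: str) -> str:
        # 1. Reasoning: break the task into steps rather than answering at once.
        for step in self.plan(task):
            # 2. Tool use: look each step up and keep the finding in memory.
            self.memory.append(f"{step}: {self.search(step)}")
        # 3. Synthesis: assemble the report from everything remembered.
        return "\n".join(self.memory)


# Offline stand-ins so the sketch runs as written (assumptions, not real APIs).
def toy_plan(task: str) -> List[str]:
    return [f"background on {task}", f"recent data on {task}"]


def toy_search(query: str) -> str:
    return f"(placeholder finding for '{query}')"


agent = ResearchAgent(plan=toy_plan, search=toy_search)
report = agent.run("steel tariffs")
print(report)
```

The point of the sketch is the division of labour: the planning step decides what to look for, the tool fetches it, and the memory lets later steps build on earlier ones.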


Cox observes that it has been a longstanding dream of AI researchers to develop AI that can iteratively improve on itself until it achieves a hard take-off through artificial general intelligence (AGI) en route to super intelligence. “We are a step closer to self-coding,” he says.

Performance observations

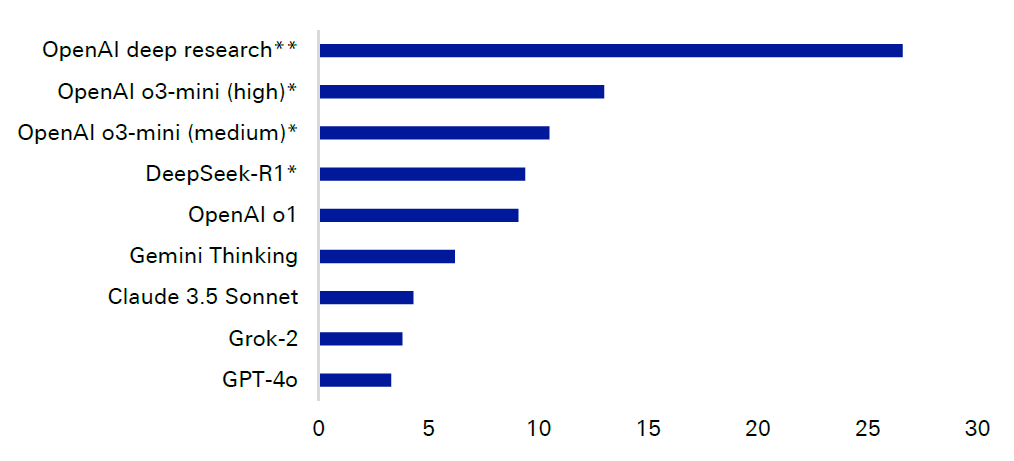

Figure 1: OpenAI’s deep research significantly outperforms earlier models

Source: OpenAI "Introducing deep research", Feb 2, 2025, Deutsche Bank

*Model is not multi-modal, evaluated on text-only subset.

**With browsing and Python tools

Figure 1 shows how OpenAI’s deep research significantly outperforms earlier models on the tough new multimodal benchmark Humanity’s Last Exam (HLE), scoring 26.6% – twice that of any predecessor. US business publication Forbes reported on 12 February that the HLE comprises more than 3,000 questions across hundreds of subjects ranging from rocket science to analytic philosophy.5 The test also revealed that just because a task takes a human longer, it does not mean the AI model will find it harder. “The pass rate for OpenAI’s model in internal tests generally declined for more economically valuable tasks but it actually began to slightly improve on tasks that take expert humans longer than three or four hours,” reported Cox, citing company information.

For now, OpenAI deep research is only available to ChatGPT Pro users on a US$200 per month plan in the US, UK, Switzerland, and the EEA, according to OpenAI.

The Deutsche Bank Research test, to produce a report on new US steel and aluminium tariffs in eight minutes, was “not perfect”, concedes Cox, and was not entirely able to shake off the inherent weaknesses of generative AI, such as a lack of context. “In our tariff example, we needed a second attempt to get it to address the most recent round of tariffs,” he adds. It did this successfully.

While it is clearly still early days for deep research tools, these launches early in 2025 suggest that they, together with the underlying LLMs that power them, “will continue to improve”. Cox concludes, “only time will tell how far they will eventually move beyond rehashing other people’s ideas to generating entirely new concepts altogether”.

A brave new world beckons indeed.

Deutsche Bank Research report referenced

What AI deep research means for you, by Adrian Cox, Research Analyst, Deutsche Bank (12 February 2025)

Sources

1 See dbresearch.com

2 See techcrunch.com

3 See opentools.ai

4 See forbes.com

5 See fortune.com