January 2021

Corporate clients are demanding insights and predictive analytics built on high-quality data from their bank. There is a good reason for this − many have a global footprint, complex flows and a large product suite. flow’s Clarissa Dann and Deutsche Bank’s Cian Murchu share the Deutsche Bank journey.

According to European Commission statistics, the value of the data economy in its 27 EU countries, together with that of the UK, is estimated to exceed €440bn in 2020.1 It defines the data economy as representing the overall impacts of the data market on the economy as a whole. It involves the generation, collection, storage, processing, distribution, analysis elaboration, delivery, and exploitation of data enabled by digital technologies.

What does this mean for corporate and business financial services provision? In short − the winners will be those with the best analytic competencies backed by strong data quality. Organisations are becoming more data-centric rather than application- or process-centric as they rely more and more on data for decision-making.

This article provides an overview of Deutsche Bank’s journey with a particular focus on how the Corporate Bank harnessed data to support its corporate clients.

Regulatory drivers

Inefficient technology architecture, multiple sources of data, inconsistent taxonomies, numerous data formats, disparate processes, lack of golden source reference data adoption and lack of data ownership are common root causes of data quality issues.

Fines from regulators will often have their origins in incorrect and inaccurate reporting – successive acquisitions bring with them incoming data sets that create difficulties when the acquired business becomes part of a larger enterprise. Problems have typically arisen from data truncations, lack of completeness, mapping issues and inconsistent data.

The other driving force for improved data frameworks comes from regulatory requirements such as the Payment Services Directive 2 (PSD2), under which payment service providers need a robust framework and structure within the data that satisfies new use cases. And to comply with the General Data Protection Regulation (GDPR), financial services providers have needed a much more granular grasp of specific sets of personal and private information. A further issue is that while good data sets can be used to drive strategic insights for clients, they must be used with awareness and controls for public and private side separation and for potential conflicts of interest. AI applications and ethics must also be controlled.

From a prudential regulatory perspective, the Basel Committee on Banking Supervision’s 14 Principles for data aggregation and risk reporting (BCBS Standard 239)2 is a framework that is actively used to assess firms’ approach to data quality. Regulators review firms’ approach with an eye to accuracy, completeness and timeliness. These are considered to be ‘hard’ checks, in that they can be defined by metrics.

Rethinking data management

As explained in the flow article, Tomorrow’s technology today, Deutsche Bank created a new Technology, Data and Innovation (TDI) division in October 2019 to get the technology transformation process underway by reducing administration overheads, taking further ownership of processes previously outsourced and building in-house engineering expertise. Its mission is to “provide and use the right common data, skills and tools for everyone to make decisions and enable innovative solutions that create value for clients and the Bank”.

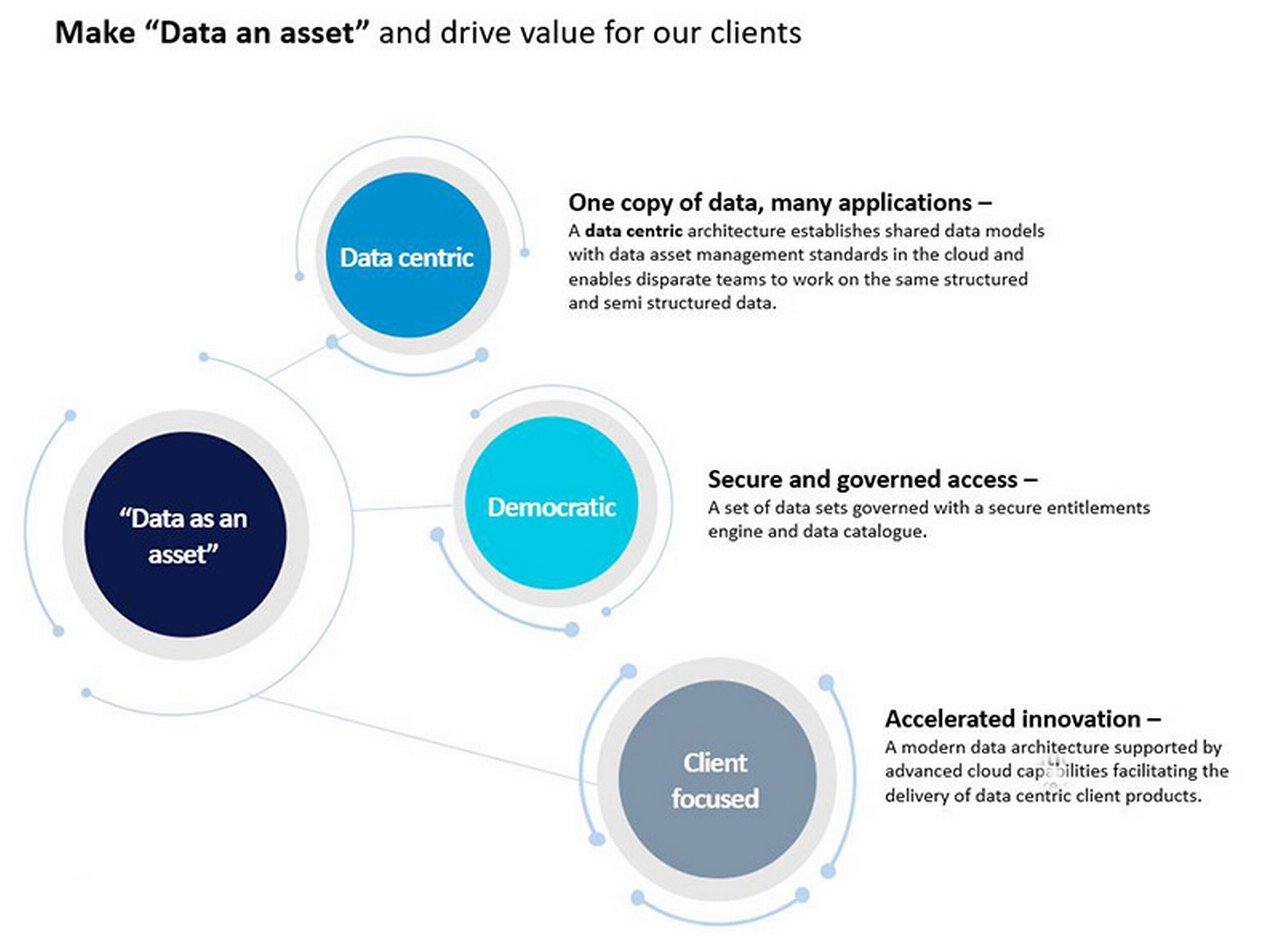

What does this look like when done well? Finding the balance between security and stability, coupled with the agility to deliver analytics and value that help customers, is not easy, but it is something that is shaping the new competitive landscape (see Figure 1).

Figure 1: The data-focussed organisation – making data an asset

Source: Deutsche Bank

“Analytics help a bank understand what its clients need from partners in terms of liquidity management”

Not only are clients asking for increased transparency on financial and non-financial metrics, data-driven insights and recommendations, and harmonised information flows across banks, but corporate treasurers from large commercial clients now also look to their banking partners to be data and technology innovation partners in addition to providing traditional banking services.

Banking partners, including Deutsche Bank, are investing in analytics to examine where and how businesses can make efficiencies and run a more streamlined operation. They can highlight, for example, where cost is leaking from a business and where more growth can be found. Marc Recker, Head of Institutional Cash Management Product, Deutsche Bank, notes how “these analytics can also help a bank understand what its clients need from partners in terms of liquidity management, cash optimisation and investment”.

The Chief Data Office (CDO) team works closely with Deutsche Bank Corporate Bank’s data office (Deutsche Bank Investment Bank and Deutsche Bank Private Bank also have their own data offices) to ensure that everything touching a client (data, cash, digital platforms and reporting) is secure, intuitive and sustainable. But by having a remit across the Bank, the CDO ensures consistency between the offices in key areas such as establishing principles, policies and standards that need to apply across the enterprise.

“The Chief Data Office is an enterprise-wide engine of value creation and our data quality strategy sits at the core of our objective to make ‘data an asset’ and accelerate the development of data products for our clients,” says James Whale, Head of Data Quality Management, Chief Data Office, Deutsche Bank.

Rethinking data

In 2017, the CDO set about developing a data management strategy, investing in improved data governance and quality management. It sought to transform its data management function from a defensive tool that prevented financial crime and ensured client privacy, to a dynamic development tool that brings new insights and capabilities to clients. This included the following areas of focus:

- Data-focussed talent and culture. This meant: improving the group-wide understanding of the critical need for investment, the visibility and understanding of the Bank’s data assets, providing a core pool of data analytics, modelling and engineering skills, and developing continuous learning programmes.

- Data architecture and tools. This meant defining interim and target architectures (as you can’t get to where you need to be overnight) that represent the future streamlined data landscape, developing standards, patterns and guidelines to support the migration of data assets to the cloud, and providing a content platform and dashboard that users could easily navigate.

- Data quality. This meant ensuring that the data flowing through the Bank was of the highest quality with controls in place so that any problems were addressed at source rather than downstream.

- Data lineage and lifecycle management. The ability to see the status of the data flows for critical applications and reporting outcomes. Is the data “as built”? Or is it awaiting an update?

- Data analytics. This involved the establishment of a group-wide data portfolio for a consolidated view on all data-relevant projects (including and beyond governance projects), development of best-in-class privacy tools, right-time analytics and real-time processing capabilities to support priority business use cases such as payments innovation, transaction monitoring, KYC and 360 view of the customer, underpinned by best practice frameworks and standards.

“Data is a living breathing thing and never perfect, but when it stops someone from doing something, it becomes an issue”

Corporate Bank implementation

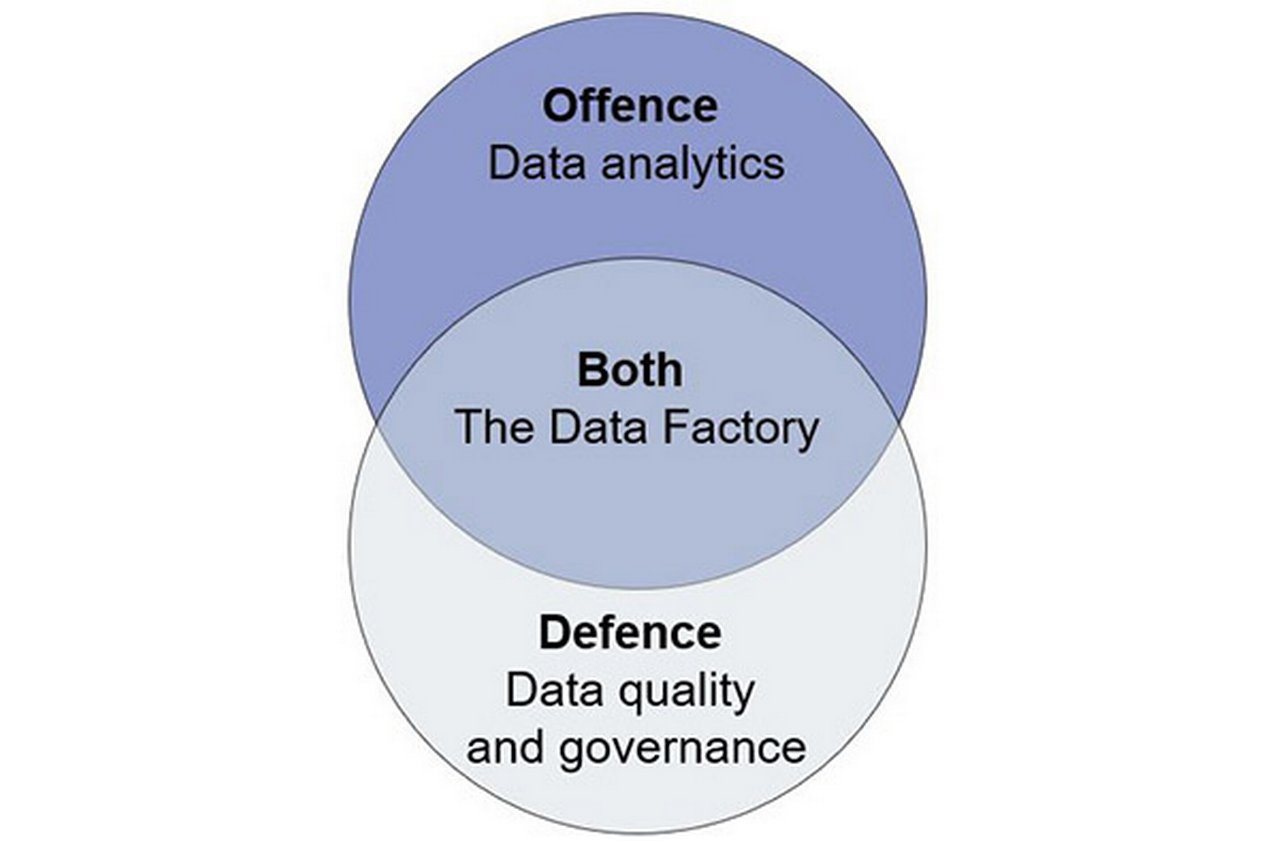

Figure 2: Offensive and defensive data strategy

David Gleason is the Corporate Bank’s Chief Data Officer and joined Deutsche Bank in September 2018 having held data strategy, governance and analytics roles at J.P. Morgan Chase and BNY Mellon.

Upon his arrival, not only had the Data Quality Platform got underway in the Corporate Bank but at the same time there was a wider Bank data governance overhaul where the CDO was setting up and driving some of the tooling and processes. “A lot of work was in place around understanding the data requirements of risk, finance, the treasury and AFC, making sure we knew where to get that data and putting controls around it,” he reflects. “My goal coming in was not only to continue and grow that work but also to introduce the ‘offensive’ (proactive) side of the equation to that,” adds Gleason (see Figure 2). This meant taking everything the Bank had already been doing around data and process improvement and using this to create better capabilities.

In terms of maintaining data integrity and quality, a focus confined to what Gleason calls “defensive problems” means that “once the fires are out there is less incentive for investment”, kicking off a feast and famine cycle.

In this defence-only world, every time a data analyst builds a new analytic capability they have to solve all the problems associated with obtaining, understanding, and preparing the data needed for that enquiry. Then another analyst will come along with a different enquiry calling on the same dataset and have to tidy up all over again. Gleason cites the received wisdom that the average data scientist across all industries spends 85% to 89% of their time finding and fixing data “with only 5% of the time spent on the tasks you are paying them for”.3 This was borne out in a Corporate Bank pilot where data scientists spent almost 80% of their time on mundane data gathering and preparation activities rather than on the real business analytics that add value.

His vision for a balanced offence-defence approach is to harness all the work that goes into “defensive” data governance, and make it available for proactive analytics work. This would mean less time is spent solving the same problems in isolation over and over again, and the investment is channelled into repositories and architecture that work proactively as well.

Enter the Data Quality Platform and the Data Factory.

The Data Quality Platform

Targets were set to increase data quality transparency and reduce ad hoc adjustments for critical processes such as credit risk, balance sheet and liquidity management. This was something of a mammoth task given the Corporate Bank’s multinational and multi-divisional structure where data is captured differently across different systems. It was agreed that a successful solution had to (in addition to meeting regulatory requirements):

- Create a positive experience for the Corporate Bank’s internal customers

- Improve data quality to reduce ad-hoc adjustments

- Deliver significant operational efficiencies

- Enhance decision-making across financial and non-financial risk

- Enrich customer and risk insights that improve new products and services

The first phase of getting the Data Quality Platform off the ground was to understand the data issues raised by the following areas of the Corporate Bank:

- Businesses (for example Cash Management, Trade Finance and Lending, Securities Services)

- Finance, treasury and risk support functions (consumers of data)

When issues raised by these two groups are resolved, this reduces problems raised by external auditors.

Quality issues discussed included:

- Timeliness – Was the data available when needed?

- Completeness – Did it fulfil expectations on comprehensiveness? Did the user receive all the transactions in an End of Day file? Were all the required fields/attributes populated?

- Accuracy – How does the data reflect reality? Is the client on a transaction the right client?
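The three dimensions above lend themselves to simple, metric-based checks. The following is a minimal sketch only, not the Bank’s DQ Direct tooling: the field names, the record layout and the idea of testing accuracy against a reference set of known clients are all assumptions made for illustration.

```python
from datetime import datetime

# Hypothetical required fields for an end-of-day file (illustrative only).
REQUIRED_FIELDS = ("transaction_id", "client_id", "amount")


def is_timely(delivered_at: datetime, needed_by: datetime) -> bool:
    """Timeliness: the data arrived no later than the consumer needed it."""
    return delivered_at <= needed_by


def completeness_issues(records: list[dict], expected_count: int) -> list[str]:
    """Completeness: all expected transactions received, all required fields populated."""
    issues = []
    if len(records) != expected_count:
        issues.append(f"expected {expected_count} records, received {len(records)}")
    for i, rec in enumerate(records):
        for field in REQUIRED_FIELDS:
            if rec.get(field) in (None, ""):
                issues.append(f"record {i}: missing {field}")
    return issues


def accuracy_issues(records: list[dict], known_clients: set[str]) -> list[str]:
    """Accuracy (a proxy): the client on each transaction exists in reference data."""
    return [
        f"record {i}: unknown client {rec['client_id']}"
        for i, rec in enumerate(records)
        if rec.get("client_id") and rec["client_id"] not in known_clients
    ]
```

Each function returns either a yes/no answer or a list of described issues, which is the kind of output that could then be reported for analysis, theming and remediation.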

Once in place, this Phase one technology (DQ Direct) provided the ability to report data issues for further analysis, theming and remediation.

The second phase of the Data Quality Platform is the inclusion of detective controls or ‘Data Contracts’ that act as a bridge between the Corporate Bank (the data producer) and the consumers (the Group’s Treasury, Risk and Finance teams). Launched late 2019, this proactively used governance tools to identify and help remediate potential quality issues – going back to Gleason’s two-pronged vision of securing defensive and proactive data.

In parallel, the Corporate Bank, through its rigorous engagement with Front Office and Operations Management, is changing the culture of data ownership. “While we are continuing to make progress in improving the quality of our data, there is also a clear shift in data ownership with Front Office and Operations managers taking an active role in prioritising and managing their quality issues,” says Sam Pandya, Data Quality Lead, Corporate Bank, Deutsche Bank.

The Data Factory

“Data lives in the applications where it is first collected and used,” explains Gleason. The Bank, he continues, has good controls around those applications and nobody wants to disrupt what is already working well. The primary users of the data are the businesses processing cash and performing transactions, but the secondary usage of the data for analytics and financial reporting is just as important, he adds.

When pulling data from multiple countries and systems, there could be local nuances to the way that data has been captured and stored. Codes used to describe account statuses might not be universally agreed and there could be different business rules on how the data was captured. While this works perfectly in those systems at a local level, the difficulty arises when the data scientist tries to aggregate it globally. At this point universal definitions are needed – for example what an active account looks like, what channel is acceptable for onboarding a client, etc.
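To make the aggregation problem concrete, here is a small sketch of how locally defined account-status codes might be translated into one shared definition before counting “active” accounts globally. The system names, codes and the `UNMAPPED` fallback are hypothetical and chosen purely for illustration; they do not describe the Bank’s actual systems.

```python
# Hypothetical local status codes per source system; real codes will differ.
STATUS_MAPPINGS = {
    "system_de": {"A": "ACTIVE", "G": "CLOSED"},
    "system_uk": {"01": "ACTIVE", "05": "DORMANT", "09": "CLOSED"},
}


def to_global_status(source_system: str, local_code: str) -> str:
    """Translate a local account-status code into the shared global vocabulary.

    Unknown systems or codes come back as "UNMAPPED" so that they surface
    as a data quality issue instead of being silently guessed.
    """
    return STATUS_MAPPINGS.get(source_system, {}).get(local_code, "UNMAPPED")


def count_active(accounts: list[tuple[str, str]]) -> int:
    """Count accounts that are active under the global definition.

    Each account is given as a (source_system, local_code) pair.
    """
    return sum(
        1 for system, code in accounts
        if to_global_status(system, code) == "ACTIVE"
    )
```

Keeping the mapping in one place means each analyst does not have to rediscover what “A” or “01” means in a given system.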

“Our goal with the data factory is to make that the place where we bring data from the applications, measure its quality, and apply whatever corrective measures we can to enrich it and curate it. It is a lot easier for me to build a Lego castle if I have got all the pieces in different bins by shape, colour and size than if it is all in one big bucket,” says Gleason.

“This aspect of curation is really important,” he emphasises. He compares this to what a museum curator does – cataloguing exhibits, and analysing provenance. In addition, early warning systems are built in using data validations and quality rules that compare the data component in question automatically against given criteria. These validations can range from simple rules like “this field should never be blank” or “the account opening date should never be earlier than the client onboarding date of the linked client record,” to more business-focused rules such as “alert me if the volume of transactions in this category varies by more than 3% day over day”.
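The three example rules quoted above can each be written as a small, automatable check. This is a sketch under stated assumptions rather than the Data Factory’s actual rule engine: the function names and the record layout are hypothetical, and the 3% threshold is taken directly from the example in the text.

```python
from datetime import date


def check_not_blank(record: dict, field: str) -> bool:
    """Rule: this field should never be blank (missing, None or whitespace)."""
    value = record.get(field)
    return value is not None and str(value).strip() != ""


def check_opening_after_onboarding(account_opened: date, client_onboarded: date) -> bool:
    """Rule: the account opening date is never earlier than client onboarding."""
    return account_opened >= client_onboarded


def volume_alert(today: int, yesterday: int, threshold: float = 0.03) -> bool:
    """Rule: alert if transaction volume varies by more than `threshold` day over day."""
    if yesterday == 0:
        # No baseline to compare against: treat any activity as a change worth flagging.
        return today != 0
    return abs(today - yesterday) / yesterday > threshold
```

For instance, `volume_alert(1040, 1000)` flags a 4% rise, while `volume_alert(1020, 1000)` stays silent at 2%.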

“A lot of the work we were recognised for in that TMI award was creating transparency and turning that into action,” he reflects, adding, “Data is a living, breathing thing and never perfect. But when it stops someone from doing something, it becomes an issue we need to address.”

The Corporate Bank’s Data Factory is a work in progress, configured as a hybrid solution with components housed on the physical Deutsche Bank platform as well as components built on the public Cloud. “This Cloud capability is critical for being able to expose client-facing analytics and collaborating with them on analytic solutions. Hosting data in the Cloud would allow us to grant our clients access to analytic workspaces where we can collaborate with them,” says Gleason.

“Democratising data and a data driven culture are about upskilling and making data a core part of everyone’s role, not just the data scientists”

Towards competitive advantage

“Our strategy is to provide and use the right common data, skills and tools for everyone to make decisions and enable innovative solutions that create value for clients,” says Deutsche Bank’s Tom Jenkins, Group Head, Data Quality & Governance. We will achieve this, he continues, by making it possible to find, access and use data in a secure and governed manner. “This is what we mean by ‘democratising data’; we make data a core part of everyone’s role, not just the data scientists. We ensure the right people in the right roles have access to the right data to carry out their work. This is what will give us the competitive edge supported by advanced cloud capabilities with our partner Google heralding a new age of data centric client products and services.”

On 21 January 2021, Deutsche Bank was awarded the TMI Best Data Management Innovation Solution for the development of a comprehensive Data Quality Platform developed within the Corporate Bank in partnership with the CDO. Deutsche Bank’s Tom Jenkins gave one of the acceptance speeches at the virtual awards ceremony.

“In banking, the crux of intelligent decision-making lies in data quality. Good quality data results in innovative products, great client service, efficient operations, decreased risk of fraud and accurate financial and regulatory reporting,” explains Jennifer Courant, Chief Data Officer, Deutsche Bank. Regarding the TMI award (see above), she adds, “We are delighted to win this award and it recognises the data management solution developed through the partnership between the Corporate Bank and Chief Data Office as sector-leading.”

Clarissa Dann is Editorial Director, Deutsche Bank Corporate Bank Marketing; Cian Murchu is Head of Data Quality Solutions at Deutsche Bank

Sources

1 See https://bit.ly/3osycUa at ec.europa.eu

2 See https://bit.ly/39meQM1 at bis.org

3 See https://bit.ly/3iOLlpu at forbes.com
